What is the Googlebot Simulator Online Tool?

The Search Engine Crawler Simulator by Sitechecker is an online tool that analyzes a website to show how search engines such as Google crawl it. Its primary focus is identifying and diagnosing issues that might prevent the site’s pages from being properly indexed or ranked.

The Googlebot Crawl Simulator can help you in many ways

Availability for crawling: check ‘nofollow’ links and pages marked ‘noindex’, include URLs with parameters for a comprehensive analysis, review all images for SEO optimization, process and assess JavaScript to verify that dynamic content is accurate, check CSS for potential display problems, and analyze external links.

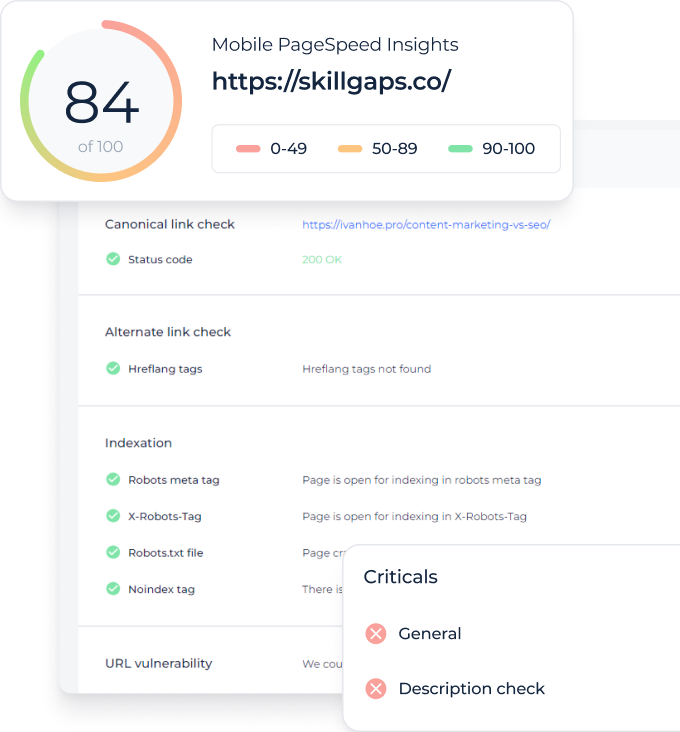
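To illustrate what checking ‘noindex’ pages and ‘nofollow’ links involves, here is a minimal Python sketch that uses only the standard library. It is not Sitechecker’s implementation; the function name `check_crawlability` and the returned keys are hypothetical. It reads `<meta name="robots">` and `<meta name="googlebot">` directives, treating `none` as `noindex, nofollow`, and sorts `<a>` links by whether their `rel` attribute contains `nofollow`. It does not inspect the `X-Robots-Tag` HTTP header or execute JavaScript.

```python
from html.parser import HTMLParser


class CrawlabilityParser(HTMLParser):
    """Collects meta robots directives and <a> links, noting rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.robots_directives = set()
        self.followed_links = []
        self.nofollow_links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values may be None, hence the "or" guards
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.robots_directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )
        elif tag == "a" and attrs.get("href"):
            rel = (attrs.get("rel") or "").lower().split()
            target = self.nofollow_links if "nofollow" in rel else self.followed_links
            target.append(attrs["href"])


def check_crawlability(html):
    """Hypothetical helper: report noindex/nofollow signals found in one page."""
    parser = CrawlabilityParser()
    parser.feed(html)
    directives = parser.robots_directives
    return {
        # "none" is shorthand for "noindex, nofollow"
        "noindex": "noindex" in directives or "none" in directives,
        "nofollow_page": "nofollow" in directives or "none" in directives,
        "followed_links": parser.followed_links,
        "nofollow_links": parser.nofollow_links,
    }
```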

Detailed reports based on a comprehensive crawl: verified data is displayed in blocks grouped by SEO factor, each with its own percentage score. These factors cover critical areas of SEO, such as Links, Indexability, Localization, Content Relevance, XML Sitemaps, Page Speed, Mobile Friendliness, Security, and more. This visual layout gives a quick, clear overview of how the website performs against these key benchmarks.

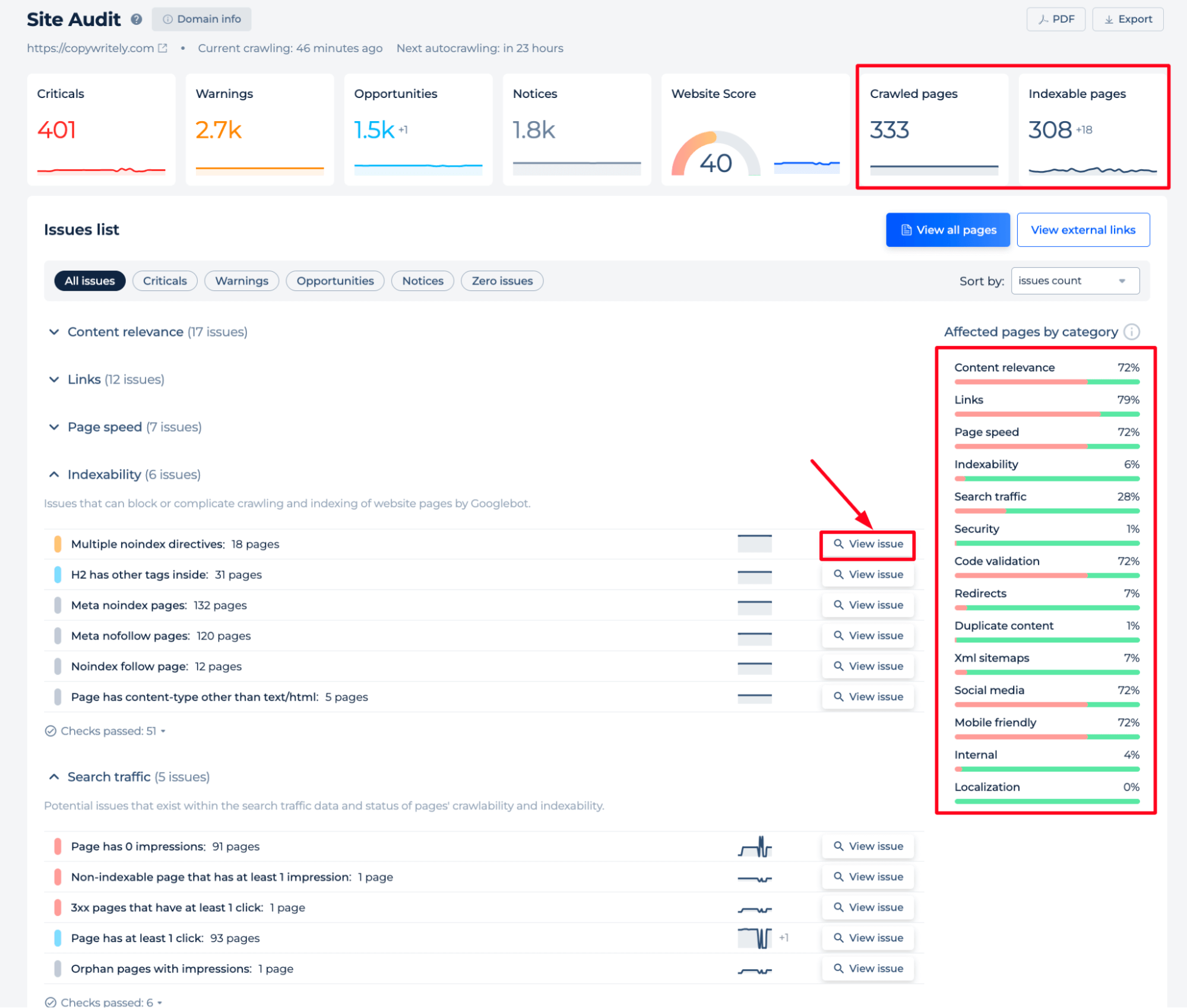

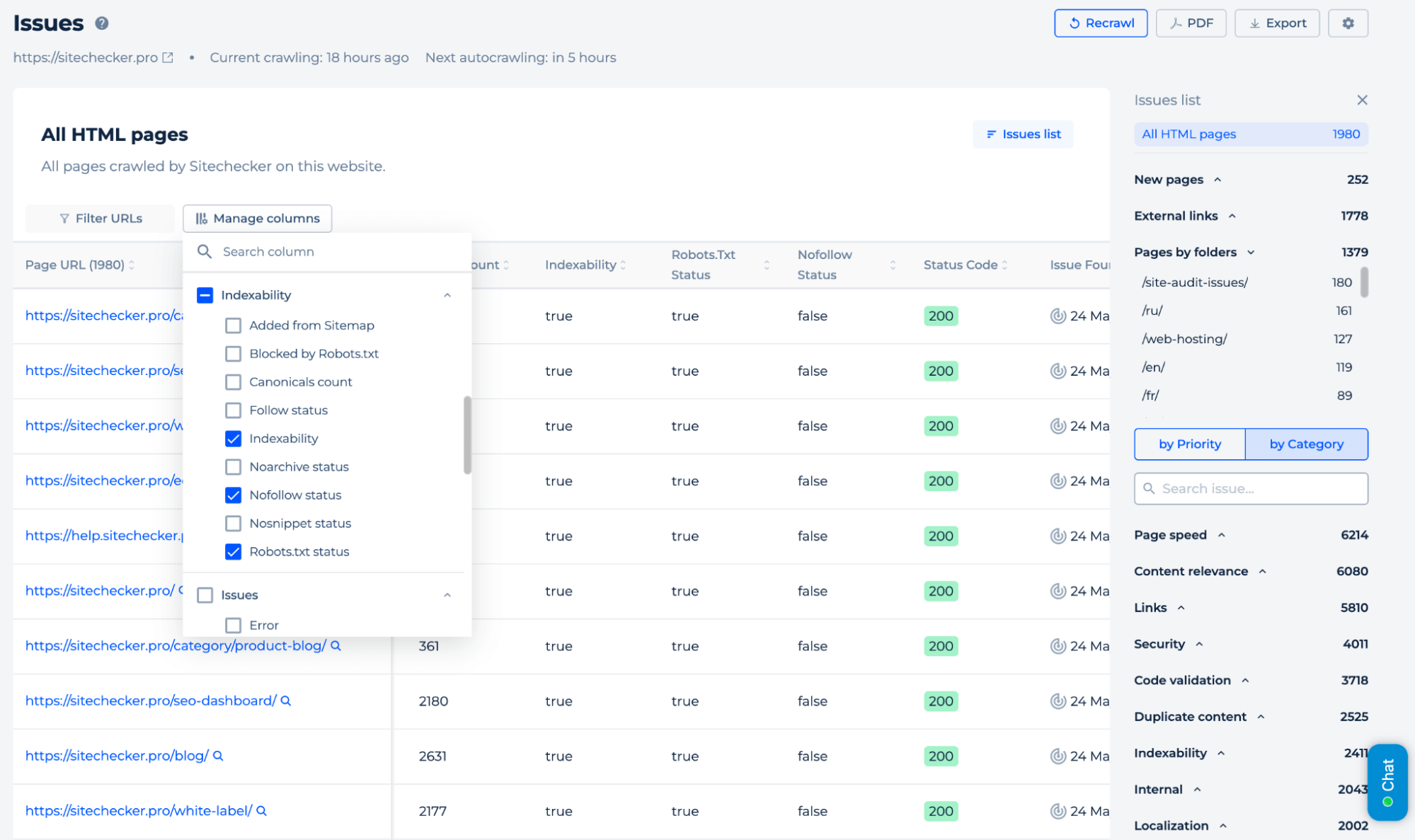

Deep analysis of the collected data: obtain precise information that is crucial for improving SEO efficiency or preventing potential problems. Configure the data output to include comprehensive details from all pages, such as status codes, meta tags, robots.txt directives, word count, and the severity of errors for prioritized resolution. The tool can also assess the technical health and optimization of each page individually.

Simulating Googlebot: our tool replicates the behavior of a search engine crawler for each page and for the site as a whole, mimicking Google’s spider to identify the errors it would detect.
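A crawler simulator starts by requesting pages the way Googlebot does. The sketch below, assuming Python’s standard `urllib`, sends one of the user-agent strings Google documents for Googlebot and returns the status code, headers, and body. The helper names are hypothetical and this is not how Sitechecker necessarily works. Spoofing the user agent only shows what your server returns for that header; it does not reproduce Google’s rendering, and servers that verify Googlebot by reverse DNS may respond differently.

```python
import urllib.error
import urllib.request

# One of the user-agent strings Google documents for Googlebot (desktop variant).
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_googlebot_request(url):
    """Build a GET request that identifies itself as Googlebot."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_googlebot(url, timeout=10):
    """Return (status, headers, body) as seen by a client sending Googlebot's UA.

    urllib follows redirects automatically; HTTP errors such as 404 are
    returned instead of raised so they can be reported as crawl issues.
    """
    request = build_googlebot_request(url)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, dict(response.headers), response.read()
    except urllib.error.HTTPError as err:
        return err.code, dict(err.headers), err.read()
```

Calling `fetch_as_googlebot("https://example.com/")` performs a real network request, so in tests it is easier to check the request object returned by `build_googlebot_request`.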

Key features of the tool

Unified Dashboard: aggregates data and insights from various SEO metrics, providing a cohesive view of your website’s performance. It allows for efficient monitoring and streamlined decision-making.

User-friendly Interface: built with a focus on user experience, with intuitive navigation that keeps the tool simple to use. It’s accessible to users of all skill levels, from beginners to advanced SEO professionals.

Complete SEO Toolset: offers an extensive collection of SEO tools, covering everything from keyword research and backlink analysis to on-page audits and rank tracking. This comprehensive toolset enables in-depth website analysis and the execution of effective SEO strategies.

How to Use the Google Crawler Simulator

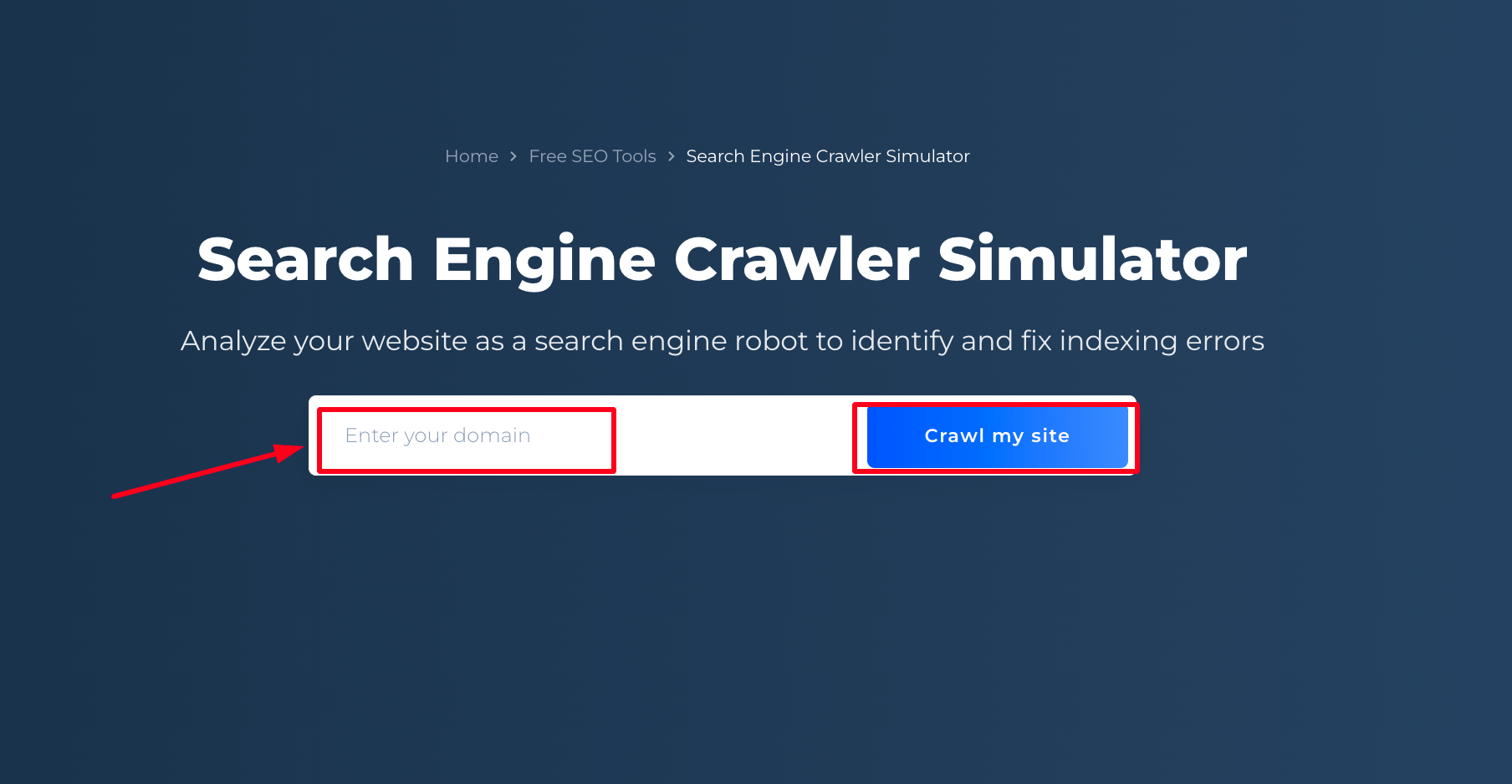

The Spider Simulator Online Tool lets you view your website from the perspective of search engine bots and identify errors that could hurt your site’s rankings. By detecting and fixing these errors before search engine spiders encounter them, you can improve your site’s performance in search results. Checking is straightforward. Let’s begin!

Step 1: Enter a website domain address

Step 2: Get the results

The Google Search Simulator Tool provides a comprehensive audit, identifying a variety of errors that search engine spiders may detect and that could affect your SEO ranking. It uncovers issues such as incorrect settings and content indexing problems, including misuse of ‘noindex’ directives and ‘nofollow’ attributes. It also spots meta tags and content duplicated across multiple pages, identifies broken pages and improper redirects, evaluates mobile compatibility, and checks sitemap configuration and robots.txt files, among other elements critical to SEO performance. Each identified issue is an opportunity to improve the site’s visibility and ranking in search engine results.
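Spotting meta tags duplicated across pages comes down to grouping pages by the same extracted value. A minimal Python sketch, assuming each crawled page has already been reduced to a dict of fields such as `title` or `description` (the `find_duplicates` helper and this input shape are hypothetical):

```python
from collections import defaultdict


def find_duplicates(pages, field):
    """Group URLs whose `field` value (e.g. "title") is identical.

    `pages` maps URL -> dict of extracted page data. Values are normalized by
    trimming whitespace and lowercasing; empty values are skipped because a
    missing tag is a separate issue from a duplicated one.
    """
    groups = defaultdict(list)
    for url, data in pages.items():
        value = (data.get(field) or "").strip().lower()
        if value:
            groups[value].append(url)
    # Keep only values shared by two or more pages.
    return {value: sorted(urls) for value, urls in groups.items() if len(urls) > 1}
```

Normalizing case and whitespace is a design choice: it catches near-identical titles, but a stricter audit might compare raw values instead.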

You can also configure the data output to suit your current optimization needs, independent of the criticality of detected errors:

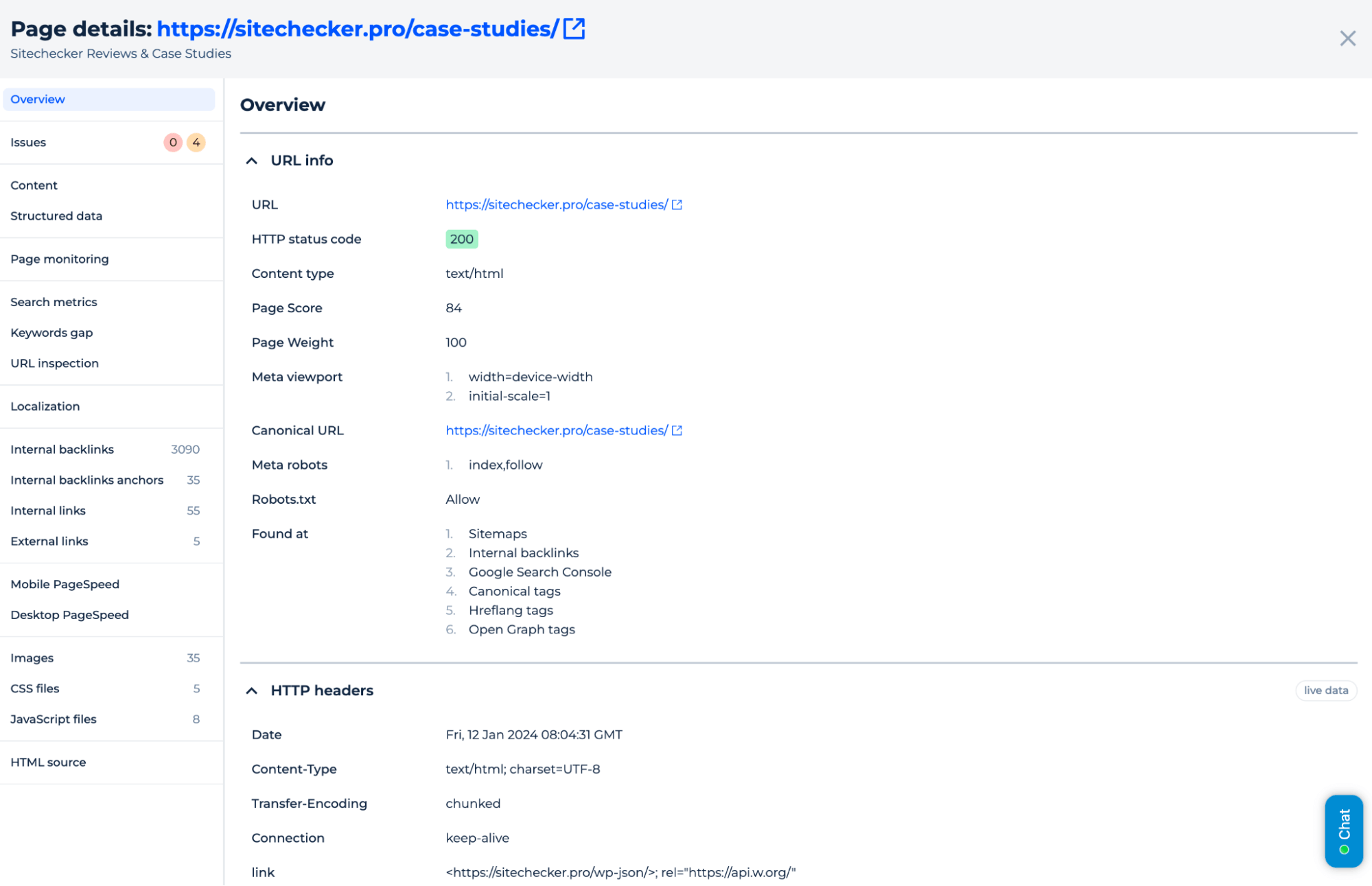

The Googlebot Simulator feature provides a detailed analysis of a page as Google’s crawler sees it. It displays crucial SEO metrics, including the page’s HTTP status, content type, and the SEO scores for page quality and content weight. The meta-information analysis covers viewport settings, canonical links, and robots directives, helping ensure that the page is correctly set up for indexing. To confirm that the page is discoverable, the tool also checks how it is exposed through sitemaps, internal backlinks, and social media integrations. HTTP header information is included so you can review server responses. This functionality is essential for webmasters who want to optimize their pages in line with how Google’s crawler would assess them.

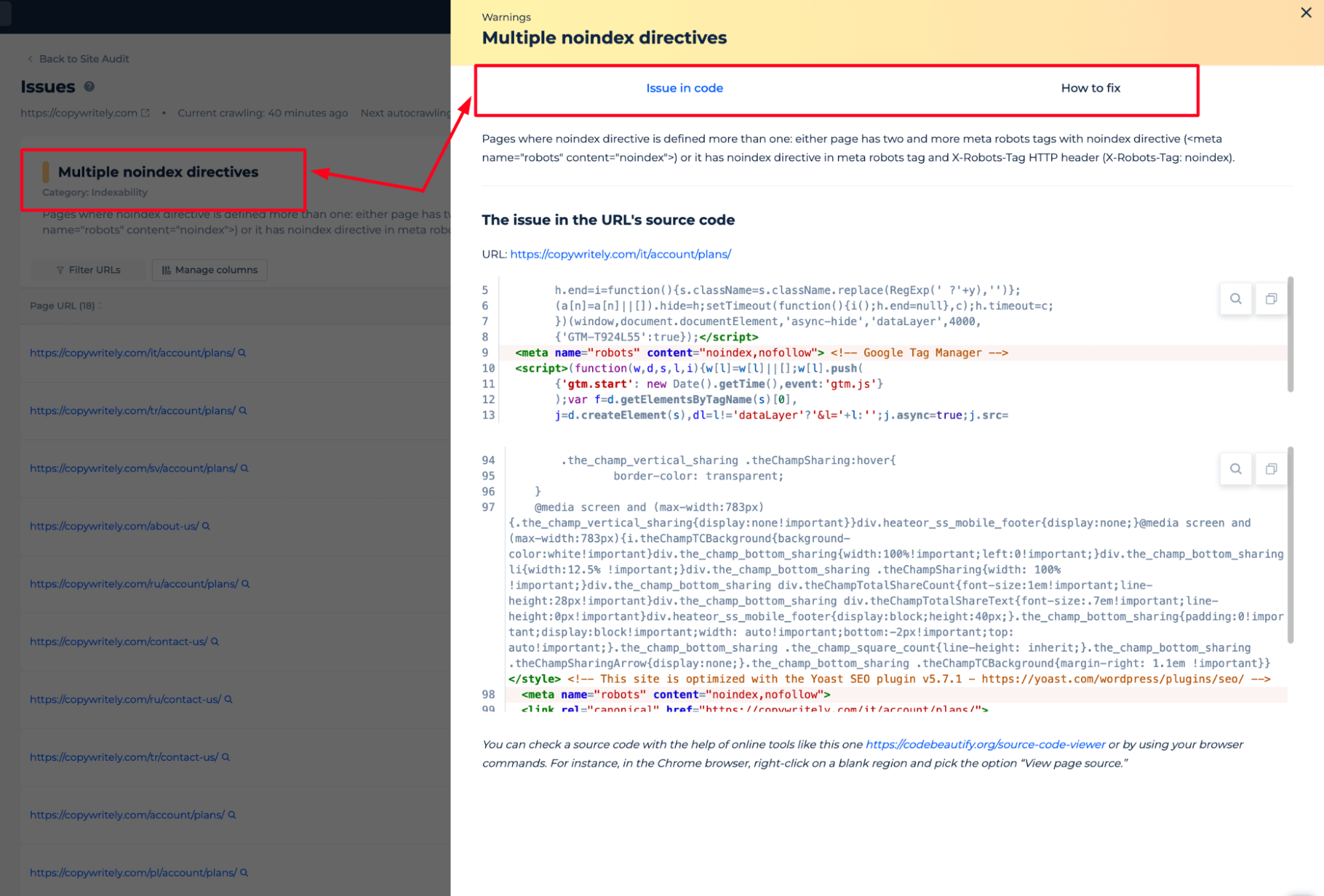
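A page-level report like this combines HTTP-level signals (status, `Content-Type`, `X-Robots-Tag`) with HTML signals (canonical link, viewport, meta robots). The Python sketch below shows one way to assemble such a summary from a response that has already been fetched. The names and the `indexable` rule are hypothetical simplifications: a page counts as indexable only when it returns 200 and neither the header nor the meta tag contains `noindex`; canonicals pointing elsewhere and crawler-specific directives are ignored.

```python
from html.parser import HTMLParser


class HeadMetaParser(HTMLParser):
    """Collects canonical, viewport and meta robots values from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.viewport = None
        self.meta_robots = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            # Keep the first canonical found; duplicates are a separate issue.
            self.canonical = self.canonical or attrs.get("href")
        elif tag == "meta":
            name = (attrs.get("name") or "").lower()
            if name == "viewport":
                self.viewport = attrs.get("content")
            elif name == "robots":
                self.meta_robots = attrs.get("content")


def summarize_page(status, headers, html):
    """Combine HTTP-level and HTML-level signals into one page report."""
    # HTTP header names are case-insensitive, so normalize them first.
    headers = {k.lower(): v for k, v in headers.items()}
    parser = HeadMetaParser()
    parser.feed(html)
    x_robots = headers.get("x-robots-tag")
    return {
        "status": status,
        "content_type": headers.get("content-type"),
        "x_robots_tag": x_robots,
        "canonical": parser.canonical,
        "viewport": parser.viewport,
        "meta_robots": parser.meta_robots,
        "indexable": (
            status == 200
            and "noindex" not in (x_robots or "").lower()
            and "noindex" not in (parser.meta_robots or "").lower()
        ),
    }
```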

The Google Spider Simulator provides a thorough analysis of each error. It shows the exact location of the error within the code and offers tailored advice on how to fix it effectively. This holistic approach ensures that users not only recognize the problems but also understand the steps required to improve their website’s functionality and SEO performance.

Additional Features

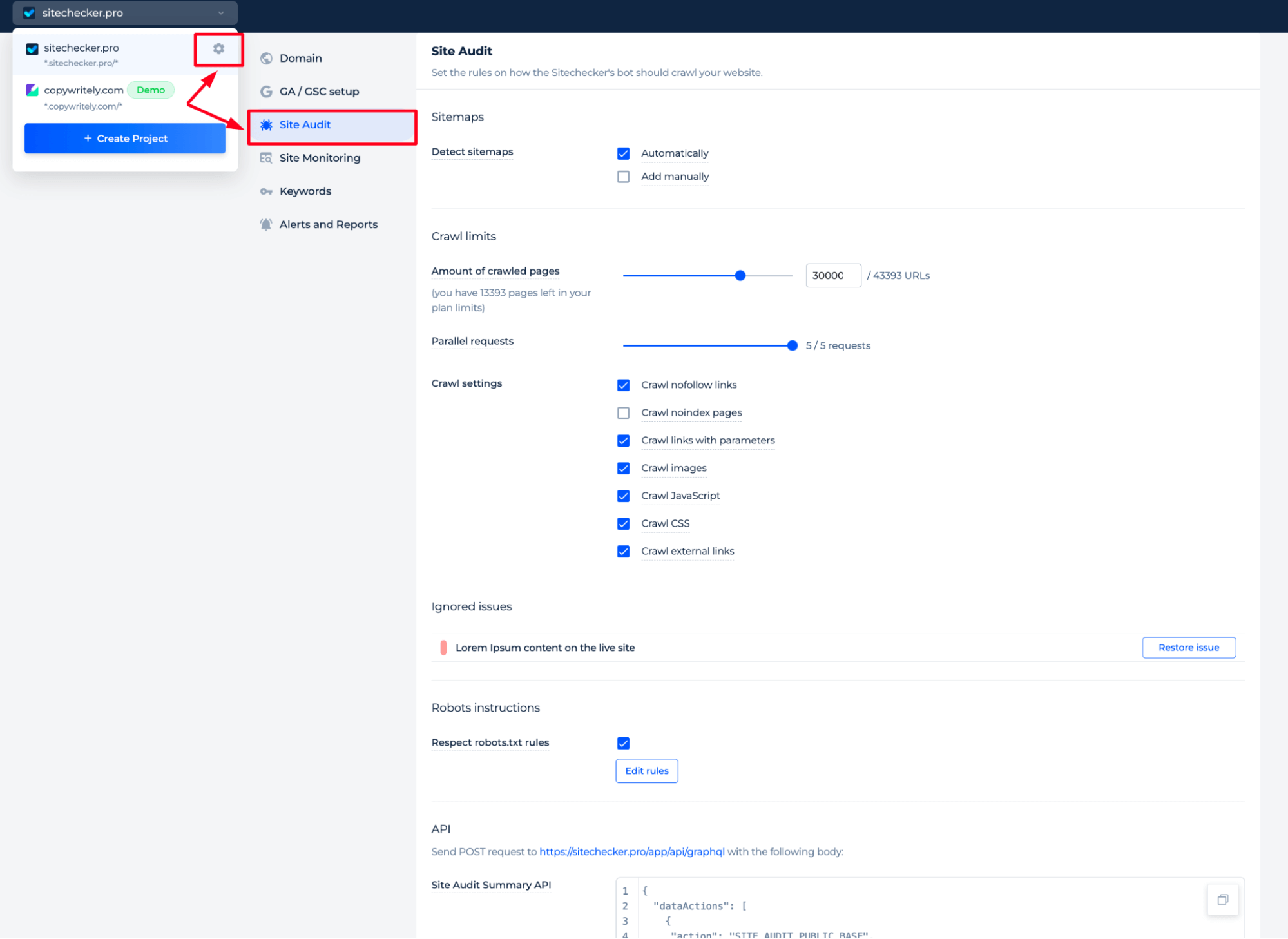

In the project settings section, you can adjust how the search engine simulator crawls your site.

Within the “Crawl Limits” section, you can set the number of pages to be crawled and the number of parallel requests, which determine the audit’s breadth and pace. The “Crawl Settings” offer a range of toggles for personalizing the crawl, including the ability to crawl nofollow links, noindex pages, and pages with parameters, as well as images, JavaScript, CSS, and external links.
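Crawl limits and toggles like these map naturally onto a breadth-first crawler. This Python sketch is an assumption about how such settings could work, not Sitechecker’s crawler: `max_pages` caps the crawl, `max_workers` bounds the number of parallel requests, and `follow_nofollow` and `include_params` mirror two of the toggles. The `fetch_links` callback is hypothetical and is injected so the logic can run without network access; external links are simply skipped here.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urljoin, urlsplit


def crawl(start_url, fetch_links, max_pages=100, max_workers=4,
          follow_nofollow=False, include_params=False):
    """Breadth-first crawl limited by page count, fetching batches in parallel.

    `fetch_links(url)` is a hypothetical callback that downloads a page and
    returns a list of (href, is_nofollow) pairs.
    """
    host = urlsplit(start_url).netloc
    seen = {start_url}
    frontier = [start_url]
    crawled = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while frontier and len(crawled) < max_pages:
            # Never fetch more pages than the remaining budget allows.
            batch = frontier[: max_pages - len(crawled)]
            frontier = frontier[len(batch):]
            # pool.map runs up to max_workers fetches at once, results in order.
            for url, links in zip(batch, pool.map(fetch_links, batch)):
                crawled.append(url)
                for href, is_nofollow in links:
                    absolute = urljoin(url, href).split("#")[0]
                    parts = urlsplit(absolute)
                    if parts.netloc != host:
                        continue  # external links are out of scope in this sketch
                    if is_nofollow and not follow_nofollow:
                        continue
                    if parts.query and not include_params:
                        continue
                    if absolute not in seen:
                        seen.add(absolute)
                        frontier.append(absolute)
    return crawled
```

Fetching each batch with `pool.map` keeps results in a deterministic order, at the cost of waiting for the slowest page in the batch before the next level starts.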

The “Ignored Issues” section lets you list specific issues for the crawler to disregard. In the “Robots Instructions” area, you can choose whether the crawler follows the directives in the website’s robots.txt file.
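Honoring robots.txt can be sketched with Python’s standard `urllib.robotparser` module; the `make_robots_checker` helper is hypothetical. Be aware that this parser applies rules in first-match order and does not implement Google’s longest-match precedence or `*`/`$` path wildcards, so on complex files its answers can differ from Googlebot’s.

```python
from urllib.robotparser import RobotFileParser


def make_robots_checker(robots_txt, user_agent="Googlebot"):
    """Return a predicate telling whether `user_agent` may fetch a URL.

    `robots_txt` is the file's text, already downloaded; parse() also marks
    the parser as loaded so can_fetch() gives real answers.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return lambda url: parser.can_fetch(user_agent, url)
```

For example, with a file that disallows only `/private/` for Googlebot and blocks everything for other bots, the checker allows `https://example.com/public/page` for Googlebot but not for any other user agent.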

At the bottom, the API section explains how to send POST requests to Sitechecker’s API endpoint, so the tool can be integrated with other systems or used in custom workflows.

Final Thoughts

The Search Engine Crawler Simulator is an essential tool for improving website SEO. It simulates how search engines crawl and index a site and identifies problems such as broken links, poor site structure, redirect issues, slow loading times, duplicate content, and poor mobile responsiveness. The dashboard presents these insights clearly, and the user-friendly interface suits all skill levels. The tool guides users through the SEO audit process, from entering the domain to receiving a detailed report with error locations and fix recommendations, so website owners can address issues effectively and improve their site’s search engine visibility and ranking.