Why can a page with a Noindex directive receive organic search traffic, and how do you check and fix the issue?

What are the Noindex tag and organic traffic?

Noindex is a tag used to prevent search engines from indexing a web page. Search crawlers systematically visit sites on the Internet, analyze their content, evaluate the authority and relevance of URLs for queries, and add them to the index. Indexed URLs can appear in SERPs, and the visits users make by clicking those search results are organic traffic.

Every site has pages with information that is not meant to be found through search. To keep such pages out of the index, you need to give search engine crawlers an appropriate directive. If Googlebot finds a Noindex directive in a page's HTML code or HTTP response header, Google will not display that page in search results.

What triggers this issue?

Sometimes webmasters see in Search Console that a page closed to indexing still receives organic traffic. The most common causes of this issue are:

- The URL was previously indexed. Too little time has passed since the Noindex directive was added, and the search engine's indexing data have not been updated yet. A page with a Noindex directive is removed from search results only after search crawlers recrawl it and see the directive.

- The site has internal links leading to the Noindex page.

- The page is blocked in the robots.txt file, so Googlebot cannot crawl it and never sees the Noindex meta tag. As a result, the URL can stay in the index.
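To check whether robots.txt is the culprit, you can test whether a given URL is disallowed for Googlebot. Below is a minimal Python sketch built on the standard-library `urllib.robotparser`; the function name `blocked_by_robots`, the robots.txt content, and the example.com URLs are hypothetical. This parser matches rules by simple path prefix and does not implement Google's `*` and `$` wildcard extensions, so treat its answer as an approximation.

```python
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules forbid user_agent from crawling url.

    A crawler that may not fetch the page never reads its noindex tag.
    Note: prefix matching only; Google's * and $ wildcards are not supported.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return not parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for url in ("https://example.com/private/report.html",
                "https://example.com/blog/post.html"):
        print(url, "blocked" if blocked_by_robots(ROBOTS_TXT, url) else "crawlable")
```

If a page that carries a Noindex tag turns out to be blocked, the Disallow rule has to be lifted for it so that crawlers can read the directive.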

How to check the issue?

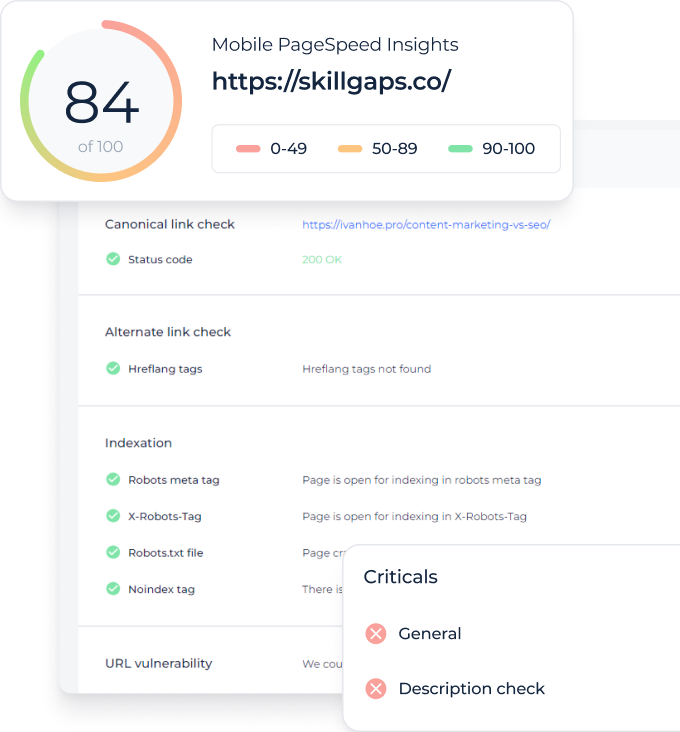

If the issue occurs, first check whether indexing is actually blocked for the URL: the Noindex tag may not have been added, or it may be incorrectly formatted. To see which pages are marked with the Noindex directive, you can use the Index Coverage report (now called the Page indexing report) in Google Search Console; Google's official video explains how this tool works and what features it offers. The report contains detailed information about the indexing status of the site's pages.
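Besides the report, you can inspect a single page directly. The directive can live in a `robots` (or `googlebot`) meta tag or in an `X-Robots-Tag` response header, so both places need checking. The following is a minimal Python sketch using only the standard library; the names `RobotsMetaParser`, `has_noindex` and `check_url` are hypothetical, and it deliberately ignores which crawler a user-agent-specific directive such as `googlebot: noindex` targets.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if the meta tags or the X-Robots-Tag value contain noindex or none."""
    parser = RobotsMetaParser()
    parser.feed(html)
    for value in parser.directives + [x_robots_tag.lower()]:
        # Simplification: drop an optional "useragent:" prefix, ignore which bot it targets.
        tokens = {part.split(":", 1)[-1].strip() for part in value.split(",")}
        if "noindex" in tokens or "none" in tokens:
            return True
    return False


def check_url(url: str) -> bool:
    """Fetch a live URL and check both places where noindex can be set."""
    req = Request(url, headers={"User-Agent": "noindex-check/0.1"})
    with urlopen(req, timeout=10) as resp:
        header = ", ".join(resp.headers.get_all("X-Robots-Tag") or [])
        charset = resp.headers.get_content_charset() or "utf-8"
        body = resp.read().decode(charset, "replace")
    return has_noindex(body, header)
```

For example, `check_url("https://example.com/some-page")` returns `True` when either the HTML or the response header blocks indexing (the URL is a placeholder).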

Why is this important?

Usually, the tag is used for pages with service or technical information that should not be displayed for search queries. It does not stop crawlers from visiting the page (they have to crawl it to read the directive), but it tells them not to add the page to the index, so the page is not displayed in SERPs. Users can still open the page via a direct link.

How to fix the issue?

To keep a page out of search results, add the Noindex tag to the <head> section of the page's HTML code, or set the X-Robots-Tag HTTP response header to noindex or none. A description and examples of using the Noindex directive can be found in Google's guidelines for developers:

https://developers.google.com/search/docs/advanced/crawling/block-indexing

Blocking page-level indexing with a meta tag in the <head> section:

<meta name="robots" content="noindex">

This meta tag tells search engine crawlers not to show the page in search results.

Blocking indexing with an HTTP response header:

(…)

X-Robots-Tag: noindex

(…)
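How the header gets set depends on the web server, CMS, or framework that delivers the page. As a framework-neutral illustration, here is a minimal Python WSGI application that adds `X-Robots-Tag: noindex` to selected paths; the paths in the hypothetical `NOINDEX_PATHS` set are placeholders, and on a real site the same header is usually configured in the web server or CMS instead.

```python
from html import escape

# Hypothetical service pages that should stay out of search results.
NOINDEX_PATHS = {"/cart", "/admin/login"}


def app(environ, start_response):
    """Minimal WSGI app that adds X-Robots-Tag: noindex to selected paths."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path in NOINDEX_PATHS:
        # Same effect as <meta name="robots" content="noindex">, but the header
        # also works for non-HTML resources such as PDF files.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [f"<p>{escape(path)}</p>".encode("utf-8")]
```

To try it locally, you could serve it with `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` and inspect the response headers returned for `/cart`.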

The directive can be placed in the <head> section, in the HTTP response header, or in both.

If you later need to lift the block, remove the Noindex tag from the page code or the HTTP header and request indexing of the pages again, for example with the URL Inspection tool in Search Console.